Web scraping has become an essential skill for data extraction and automation in various fields, from data analysis to competitive intelligence. Python, with its vast ecosystem of libraries, is the ideal language for web scraping. However, when it comes to web scraping, using proxies is often a crucial component to ensure anonymity and reliability. In this article, we'll explore the world of web scraping with Python, including the use of proxies and powerful scraping tools.

Understanding Web Scraping

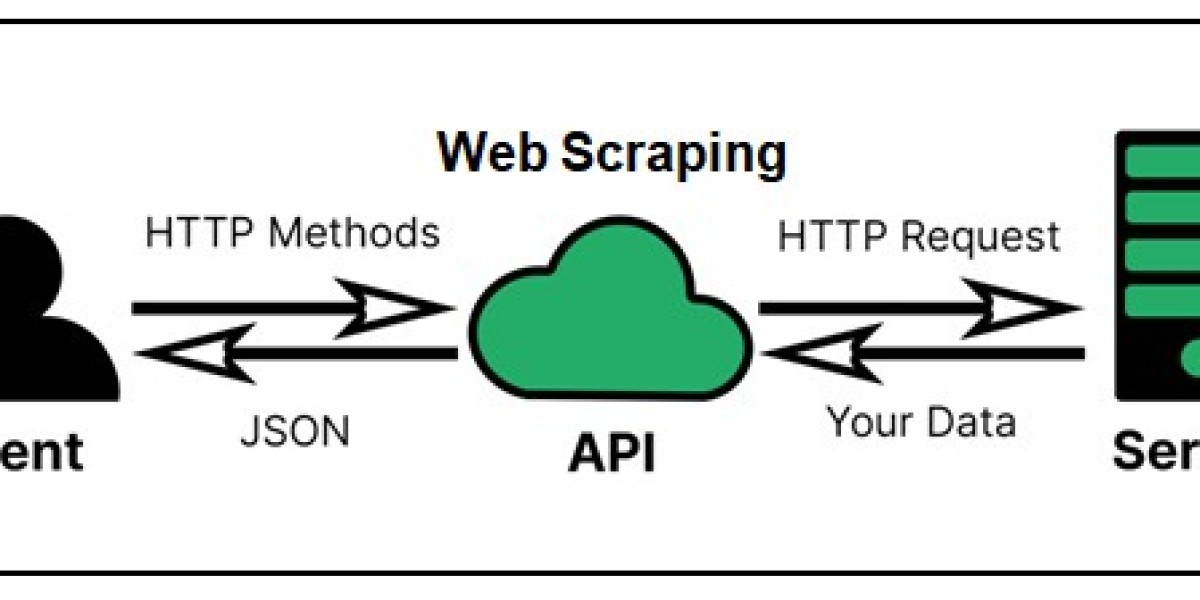

Web scraping, in simple terms, is the process of extracting data from websites. This data can range from text and images to structured data such as product prices, news articles, or real estate listings. Python is widely used for web scraping due to its rich library support and user-friendly syntax.

Python Web Scraping Tools

There are several Python libraries and frameworks that simplify web scraping:

Beautiful Soup: This library makes it easy to parse and navigate HTML and XML documents, making it a popular choice for beginners. It works well with other libraries like Requests.

Requests: Requests is used for making HTTP requests in Python. It is often combined with Beautiful Soup to fetch HTML content from web pages.

Scrapy: Scrapy is a powerful web scraping framework that provides a full suite of tools for building web scrapers. It's highly customizable and efficient for more complex scraping tasks.

Selenium: Selenium is primarily used for web automation and testing but can also be used for web scraping, particularly when dealing with JavaScript-heavy websites.

Using Proxies in Web Scraping

Proxy servers act as intermediaries between your web scraper and the target website. They can provide several advantages in web scraping:

Anonymity: Proxies can hide your IP address, making it difficult for websites to detect and block your scraping activities.

Load Distribution: Proxies allow you to distribute your requests across multiple IP addresses, reducing the chances of getting banned.

Geo-targeting: Proxies enable you to scrape data from websites that are geographically restricted, by using IP addresses from different locations.

Best Practices for Using Proxies

When using proxies in web scraping, consider the following best practices:

Rotate Proxies: Frequent rotation of proxies helps prevent IP bans and ensures consistent data retrieval.

ProxyScrape and Scraper API: Services like ProxyScrape and Scraper API offer a vast collection of reliable and high-quality proxies that you can use in your web scraping projects.

Rate Limiting: Avoid making too many requests too quickly, as this can trigger anti-scraping measures. Implement rate limiting in your scraper.

Error Handling: Be prepared for proxy-related errors and implement error handling to avoid scraping interruptions.

Web Scraping with R

While Python is the most popular language for web scraping, R is also capable of web scraping with libraries like 'rvest' and 'RSelenium.' R can be a valuable tool for web scraping when you are working with data analysis and visualization in R.

Conclusion

Web scraping is a powerful technique for data extraction and automation. Python, with its vast ecosystem of libraries, is an excellent choice for web scraping projects. When scraping, using proxies is often necessary to ensure anonymity and reliability. Services like ProxyScrape and Scraper API provide easy access to a vast pool of proxies that can enhance your scraping capabilities. By mastering web scraping and incorporating proxies into your projects, you can unlock valuable data sources and gain a competitive edge in various domains.